| Exam Year | No. of questions | Pre-Training Accuracy | Post-Training Accuracy | Margin of Improvement |

| 2024 | 30 | 26.7 % | 36.7 % | +10.0pt |

| 2025 | 30 | 33.3 % | 43.3 % | +10.0pt |

Using data that includes complex calculation steps not only improved accuracy in mathematical reasoning tasks but also helped prevent breakdowns in step-by-step calculations.

This dataset is available on Hugging Face (see below)

https://huggingface.co/datasets/APTOinc/llm-math-reasoning-dataset

For our existing clients, this will be delivered shortly via our email newsletter.

Future Dataset Development

In areas that require logical reasoning, it's important not only to reach the right answer but also to clearly understand and reproduce the steps that lead there. As a result, approaches that emphasize the quality of the reasoning process itself are gaining attention. (*4)

Because LLM technology is advancing at a rapid pace, we believe it is necessary to develop datasets for other fields as well, datasets that enable models to accurately follow step-by-step processes, while keeping in mind constantly shifting needs and technical challenges.

We are developing new datasets in line with future technology trends and customer needs. We hope these will help accelerate your AI development and further improve accuracy.

| Sources: |

| *4: The Lessons of Developing Process Reward Models in Mathematical Reasoning (2025), https://arxiv.org/abs/2410.17621 |

About APTO

We provide AI development support services that focus on data, the factor that has the greatest impact on accuracy in any AI development. Our offerings include:

- harBest, a data collection and annotation platform that uses crowd workers;

- harBest Dataset, which accelerates the preparation of data often considered a bottleneck in the early stages of development;

- harBest Expert, which improves data accuracy by incorporating expert knowledge.

By supporting AI development that might be restricted due to data-related challenges, we have earned recognition from many enterprises both in Japan and abroad.

TOKYO, Oct. 1, 2025 /PRNewswire/ -- As generative AI use continues to increase, accuracy has become the most important metric and a key factor in decisions around adoption and utilization. APTO is committed to supporting companies and organizations through high-quality AI data.

In recent years, the performance of LLMs has improved dramatically. However, in mathematical tasks that require multi-step calculations or strict answer formats, errors and formatting issues are still frequently observed. To address these challenges, we have developed and released a training dataset for LLMs designed to enhance reasoning and answer accuracy in mathematical problem-solving.

Background: Developing an LLM Dataset for Mathematical Reasoning

LLM developers and users have no doubt encountered the following challenges when dealing with mathematics:

- The model does not output step-by-step calculations

- It fails to follow the calculation process accurately and produces an incorrect answer

- Responses not conforming to the required answer format, such as integers or fractions

- Responses that do not show the problem-solving process (for example, omitting intermediate steps or outputting only the final answer)

In tackling complex mathematical problems, it is not uncommon to encounter outputs that disregard instructions or rules and fail to provide accurate answers.

We drew on our experience enhancing the reasoning abilities of LLMs to improve the accuracy of answers to mathematical problems. Based on this expertise, we developed a dataset of mathematical problems that includes complex reasoning processes.

About This Dataset

This dataset is mathematical reasoning data in JSON Lines format, created through a combination of machine generation and human review. It is designed for training PRMs (Process/Preference Reward Models) and includes not only the problem statement, correct answer, and generated responses, but also the reasoning process (Chain-of-Thought) and evaluation information for each step. This enables qualitative assessment of the reasoning process, rather than just a simple right-or-wrong judgment.

What Is Included in the Data Set?

- problem (mathematical problems to be input)

- expected_answer (used for automatic grading, evaluation, and format checking)

- generated_answer (used for analyzing error patterns and extracting difficult cases)

- answer_match (used for difficulty adjustment, stratified evaluation, and sampling control

- step_evaluations: array; each element consists of {step_index, step_text, verdict} (using the text of each step and its correctness label to perform PRM/process supervision)

- metadata.step_evaluation_category (eg: all_correct/partial_correct)

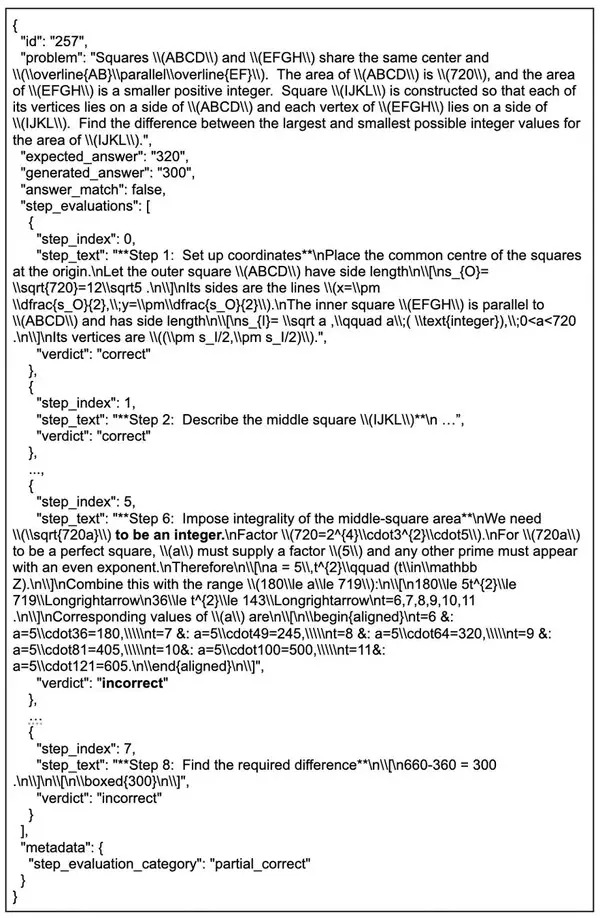

An Example of the Reasoning Process Breaking Down Midway

In this example, some of the geometric constraints are overlooked, and the range is limited only by the number-theoretic condition that "the area must be an integer." As a result, configurations that should not be allowed are included, leading to the incorrect conclusion of a difference of 300. On the other hand, since the initial derivation is correct but the reasoning collapses at the end, this is categorized as partial_correct, a typical failure pattern.

(This is an example of an incorrect answer and constitutes only a part of the published dataset.)

We ensure quality by combining automated evaluation with human checks, covering both format compliance and the accuracy of final answers. The dataset we're releasing includes 300 samples taken from the data used in training.

The Types of Questions

In order to prevent the reasoning process being skewed toward specific domains, we have divided the questions into the following categories:

The Reasoning Process

'Reasoning Process (Chain-of-Thought)' refers to the step-by-step thinking involved in solving a mathematical problem.

The dataset structures this process into an appropriate sequence: reading the problem, carrying out calculations step by step, and arriving at the answer. Each problem includes at least two reasoning steps, with solution processes ranging from two to eight steps.

Data Performance Evaluation Results

A model trained on this dataset was evaluated for performance using the following AIME problem set as an external benchmark:

- 2024 AIME (HuggingFaceH4/aime_2024) (*1)

- 2025 AIME (math-ai/aime25) (*2)

The evaluation procedure is outlined below:

1. Fine-tuning using appropriate reasoning data for mathematical problem solving as training data.

This fine-tuning is a multitask approach that combines PRM (Process Reward Model) and CLM (Causal Language Modeling).

In the PRM task, we used step_evaluations.step_text from the dataset as input, and the verdict labels ("correct / incorrect / unclear") as the training signal to classify whether each step was right or wrong. At the same time, we combined this with CLM (next-token prediction) to help the model maintain its ability to generate text. For training, we applied LoRA, which allowed us to fine-tune efficiently while keeping most of the base model frozen.

2. Evaluated answer accuracy on the AIME dataset before and after training. Since outputs can vary, each problem was answered four times, and the average score was compared.

To evaluate improvements in answer accuracy, we conducted evaluations on openai/gpt-oss-20b (*3), a model with strong mathematical performance.

When training was conducted on the above models, we observed an actual improvement of 10.0 points in answer performance.

Exam Year

No. of questions

Pre-Training Accuracy

Post-Training Accuracy

Margin of Improvement

2024

30

26.7 %

36.7 %

+10.0pt

2025

30

33.3 %

43.3 %

+10.0pt

Using data that includes complex calculation steps not only improved accuracy in mathematical reasoning tasks but also helped prevent breakdowns in step-by-step calculations.

This dataset is available on Hugging Face (see below)

https://huggingface.co/datasets/APTOinc/llm-math-reasoning-dataset

For our existing clients, this will be delivered shortly via our email newsletter.

Future Dataset Development

In areas that require logical reasoning, it's important not only to reach the right answer but also to clearly understand and reproduce the steps that lead there. As a result, approaches that emphasize the quality of the reasoning process itself are gaining attention. (*4)

Because LLM technology is advancing at a rapid pace, we believe it is necessary to develop datasets for other fields as well, datasets that enable models to accurately follow step-by-step processes, while keeping in mind constantly shifting needs and technical challenges.

We are developing new datasets in line with future technology trends and customer needs. We hope these will help accelerate your AI development and further improve accuracy.

| Sources: |

| *4: The Lessons of Developing Process Reward Models in Mathematical Reasoning (2025), https://arxiv.org/abs/2410.17621 |

Sources:

*1: https://huggingface.co/datasets/HuggingFaceH4/aime_2024

*2: https://huggingface.co/datasets/math-ai/aime25

*3: https://huggingface.co/openai/gpt-oss-20b

*4: The Lessons of Developing Process Reward Models in Mathematical Reasoning (2025), https://arxiv.org/abs/2410.17621

About APTO

We provide AI development support services that focus on data, the factor that has the greatest impact on accuracy in any AI development. Our offerings include:

- harBest, a data collection and annotation platform that uses crowd workers;

- harBest Dataset, which accelerates the preparation of data often considered a bottleneck in the early stages of development;

- harBest Expert, which improves data accuracy by incorporating expert knowledge.

By supporting AI development that might be restricted due to data-related challenges, we have earned recognition from many enterprises both in Japan and abroad.

** The press release content is from PR Newswire. Bastille Post is not involved in its creation. **

APTO Releases Training Dataset to Enhance the Mathematical Reasoning Capabilities of Large Language Models (LLMs)

APTO Releases Training Dataset to Enhance the Mathematical Reasoning Capabilities of Large Language Models (LLMs)