BEIJING, Oct. 6, 2025 /PRNewswire/ -- In 2025, "Agent" is undoubtedly one of the AI community's biggest buzzwords. It is widely believed that truly useful agents must learn to operate mobile phones and computers, interacting with graphical user interfaces (GUIs) just as humans do.

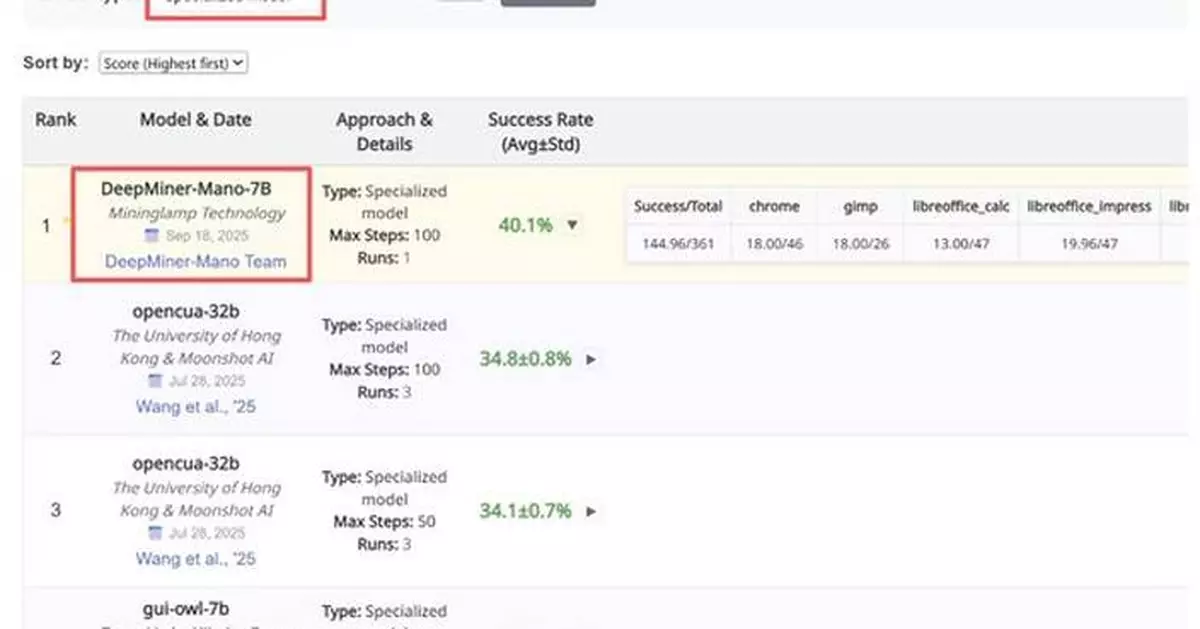

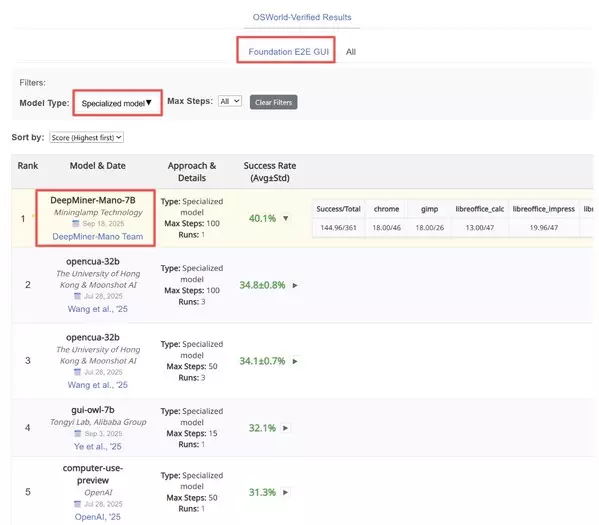

Recently, MiningLamp Technology, the leading Chinese provider of enterprise-grade large models and data intelligence, announced that its specialized GUI large model, Mano, achieved record-breaking state-of-the-art (SOTA) results on two industry-recognized benchmarks: Mind2Web and OSWorld. Through two core innovations, online reinforcement learning and automated training-data acquisition, Mano establishes a scalable, self-evolving paradigm for GUI agent development.

Leaderboard link: https://os-world.github.io/

Technical report link: https://www.mininglamp.com/news/6394/

Key Breakthroughs:

According to the technical report, in the Foundation E2E GUI & Specialized Model category of the OSWorld-Verified leaderboard, Mano reached a success rate of 41.6 ± 0.7%, surpassing models such as Qwen, GUI-Owl, and OpenCUA.

Technical Innovations:

Highlight One: First Proposal of "Online Reinforcement Learning"

Since the emergence of DeepSeek, GRPO has become the gold standard for reinforcement learning on large models. Yet most model training remains confined to offline reinforcement learning, which relies on pre-collected datasets. For GUI-based interactive agents this is a poor fit, because every operation depends on the live state of the real system the agent is interacting with.
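
For readers unfamiliar with GRPO, its defining step can be sketched briefly: rather than training a separate value model, it samples a group of rollouts for the same prompt and scores each rollout relative to the rest of the group. The minimal Python sketch below assumes binary task-success rewards and normalizes by the population standard deviation plus a small `eps` for numerical stability; it illustrates the general technique, not Mano's training code.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Score each rollout against the other rollouts sampled for the same prompt."""
    mu = mean(rewards)        # group baseline replaces a learned value function
    sigma = pstdev(rewards)   # spread of rewards within the group
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four hypothetical rollouts of one GUI task: 1.0 = task completed, 0.0 = failed.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
print([round(a, 3) for a in advantages])
```

When every rollout in a group receives the same reward, all advantages are zero and that group contributes no learning signal.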

Mano therefore introduces an "online reinforcement learning" training paradigm to GUI interaction for the first time, together with an "explorer" that acquires training data automatically. This lets the agent learn continuously from up-to-date interactions while keeping a dynamic balance between "trying new actions to gain insights" (exploration) and "executing optimal moves based on existing knowledge" (exploitation).
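
The release does not say how Mano balances exploration and exploitation. As a textbook illustration of the trade-off only, the epsilon-greedy sketch below explores a random action with probability `epsilon` and otherwise exploits the action with the highest estimated value. The action names and value estimates are hypothetical.

```python
import random


def choose_action(q_values: dict[str, float], epsilon: float, rng: random.Random) -> str:
    """Epsilon-greedy selection (illustrative stand-in, not Mano's actual policy)."""
    if rng.random() < epsilon:
        # Explore: try any available action, including ones that currently look poor.
        return rng.choice(sorted(q_values))
    # Exploit: take the action with the highest estimated value so far.
    return max(q_values, key=q_values.get)


rng = random.Random(0)
# Hypothetical value estimates for three GUI actions on the current screen.
q = {"click_submit": 0.8, "open_menu": 0.3, "scroll_down": 0.1}
print(choose_action(q, epsilon=0.0, rng=rng))  # epsilon=0 always exploits
```

Raising `epsilon` trades short-term reward for information about actions the agent has rarely tried.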

To keep improving adaptability and flexibility in real-world interaction scenarios, MiningLamp Technology built a pool of simulation environments covering both Browser Use Agent (BUA) and Computer Use Agent (CUA) settings, in which models gather diverse environmental data through live interaction. This addresses the sparse coverage of offline trajectories and yields greater robustness across a wide range of web GUI scenarios.

MiningLamp Technology also adopts an "online sampling + offline filtering" approach: trajectories are first collected through online interaction, and noisy data is then filtered out. This dynamically adjusts the distribution of task difficulty and prevents failed trajectories from making learning inefficient.
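
The release gives no concrete filtering criteria, so the following is a hypothetical sketch of "online sampling + offline filtering." It assumes each rollout records a task ID, a success flag, and a step count; it treats rollouts that exceed a step budget as noise, and keeps only tasks whose success rate lies strictly between 0 and 1, meaning tasks that are neither trivially easy nor currently hopeless. `Trajectory`, `filter_batch`, and the `max_steps` budget are illustrative names, not part of Mano.

```python
from dataclasses import dataclass


@dataclass
class Trajectory:
    task_id: str
    success: bool
    num_steps: int


def filter_batch(trajs: list[Trajectory], max_steps: int = 30) -> list[Trajectory]:
    """Hypothetical offline filter applied to a batch of online-sampled rollouts."""
    # 1. Treat rollouts that overran the step budget as noise and drop them.
    clean = [t for t in trajs if t.num_steps <= max_steps]

    # 2. Group the remaining rollouts by task.
    by_task: dict[str, list[Trajectory]] = {}
    for t in clean:
        by_task.setdefault(t.task_id, []).append(t)

    # 3. Keep tasks of informative difficulty: sometimes solved, sometimes failed.
    kept: list[Trajectory] = []
    for group in by_task.values():
        rate = sum(t.success for t in group) / len(group)
        if 0.0 < rate < 1.0:
            kept.extend(group)
    return kept


batch = [
    Trajectory("login", True, 5),
    Trajectory("login", False, 12),
    Trajectory("export", False, 40),  # over budget: dropped as noise
    Trajectory("export", False, 18),  # only failures remain: task dropped
    Trajectory("search", True, 4),
    Trajectory("search", True, 6),    # always solved: task dropped
]
kept = filter_batch(batch)
print(sorted({t.task_id for t in kept}))
```

Dropping all-success and all-failure tasks shifts the batch toward tasks at the edge of the model's current ability, one plausible reading of "dynamically adjusting the distribution of task difficulty."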

Ablation results show that adding online reinforcement learning significantly raised the model's average score on OSWorld-Verified to 41.6, 7.9 points above the offline reinforcement learning model.
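
Taking the two reported figures at face value, the implied score of the offline reinforcement learning model in this ablation is:

$$
\underbrace{41.6}_{\text{with online RL}} - \underbrace{7.9}_{\text{reported gain}} = \underbrace{33.7}_{\text{implied offline RL score}}
$$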

Highlight Two: Intelligent Exploration, Capturing Real-World Environmental Trajectories

Although large models can understand broad instructions, they often struggle to decompose complex, multi-step, goal-driven tasks into concrete execution steps. Developers therefore need to build specialized models and agents for interactive tasks, which requires massive volumes of high-quality interaction trajectory data. Historically, such data had to be constructed or annotated manually, a costly and time-consuming process. To address this, MiningLamp Technology designed an automated method for collecting training data that fundamentally improves both the efficiency and the accuracy of data acquisition. This is Mano's second major innovation.

MiningLamp Technology has built a scalable virtual environment cluster designed to simulate a variety of interactive scenarios. For each target application, the large model automatically generates a target list, prioritizes these targets, filters out functions with extremely low usage frequency, and provides clear contextual guidance for subsequent exploration.
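
As a hypothetical sketch of this target-planning step, the code below assumes each candidate target has already been given an estimated usage frequency and a priority (in Mano these come from the large model; here they are hard-coded). It drops targets below a `min_freq` threshold and orders the rest by descending priority, breaking ties alphabetically so the plan is deterministic. `Target`, `plan_targets`, and all values are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Target:
    name: str
    usage_freq: float  # hypothetical estimated share of real-world usage, 0..1
    priority: int      # hypothetical model-assigned priority, higher = explore first


def plan_targets(targets: list[Target], min_freq: float = 0.01) -> list[str]:
    """Drop rarely used functions, then order the rest for exploration."""
    frequent = [t for t in targets if t.usage_freq >= min_freq]
    # Highest priority first; alphabetical tie-break keeps the order reproducible.
    frequent.sort(key=lambda t: (-t.priority, t.name))
    return [t.name for t in frequent]


targets = [
    Target("create_spreadsheet", 0.30, 3),
    Target("insert_chart", 0.12, 2),
    Target("edit_macro_security", 0.002, 1),  # extremely rare: filtered out
    Target("export_pdf", 0.20, 3),
]
print(plan_targets(targets))
```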

For element extraction in web environments, MiningLamp Technology built a custom Chrome extension called "Mano-C" that comprehensively extracts interactive elements, capturing their spatial coordinates and semantic attributes. For desktop environments, the team combines accessibility (A11y) tree parsing with OmniParser V2, using the two sources to filter each other's results and achieve broader coverage of interactive elements.
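
The release does not specify how the accessibility-tree and OmniParser V2 results are combined. One common way to merge two element detectors, sketched below under that assumption, is to keep every accessibility-tree element and add a vision-detected box only if its intersection-over-union (IoU) with every element kept so far stays below a threshold. The inputs are plain bounding boxes; no OmniParser API is called, and the 0.5 threshold is an arbitrary illustrative choice.

```python
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2) in screen pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def merge_elements(a11y: list[Box], vision: list[Box], thresh: float = 0.5) -> list[Box]:
    """Keep all accessibility-tree boxes; add vision boxes that overlap nothing kept so far."""
    merged = list(a11y)
    for box in vision:
        # Comparing against the growing list also de-duplicates vision boxes among themselves.
        if all(iou(box, kept) < thresh for kept in merged):
            merged.append(box)
    return merged


a11y = [(0, 0, 100, 40), (0, 50, 100, 90)]     # e.g. two buttons exposed by the A11y tree
vision = [(2, 1, 98, 41), (200, 10, 260, 40)]  # one duplicate, one icon the tree missed
merged = merge_elements(a11y, vision)
print(merged)
```

Preferring accessibility-tree elements keeps their reliable semantic metadata, while the vision pass fills in elements that the tree fails to expose.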

For data annotation, MiningLamp Technology uses large models to generate a semantic label, a functional description, and an interaction category for each extracted element, producing structured, semantically aligned data that provides effective supervision for subsequent training.
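
To make "structured, semantically aligned data" concrete, here is one hypothetical record schema for an annotated element. The field names and the set of allowed interaction categories are assumptions for illustration; the release names only the kinds of information captured (spatial coordinates, semantic label, functional description, interaction category).

```python
import json
from dataclasses import asdict, dataclass

# Hypothetical vocabulary of interaction categories; the release does not list them.
ALLOWED_CATEGORIES = {"click", "type", "select", "scroll"}


@dataclass
class AnnotatedElement:
    element_id: str
    bbox: tuple[int, int, int, int]  # spatial coordinates (x1, y1, x2, y2) in pixels
    semantic_label: str              # short name, e.g. "Search box"
    functional_description: str      # what interacting with the element does
    interaction_category: str        # one of ALLOWED_CATEGORIES

    def __post_init__(self) -> None:
        # Reject labels outside the agreed vocabulary so the training data stays consistent.
        if self.interaction_category not in ALLOWED_CATEGORIES:
            raise ValueError(f"unknown interaction category: {self.interaction_category}")


elem = AnnotatedElement(
    element_id="el-17",
    bbox=(120, 40, 480, 72),
    semantic_label="Search box",
    functional_description="Filters the product list by keyword",
    interaction_category="type",
)
record = json.dumps(asdict(elem))  # one JSON line per element for a training corpus
print(record)
```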

To make data collection smarter, the team designed a prompt-based exploration module that selects which interactive elements to act on, with explicit constraints that prevent path loops and redundant branches. Exploration follows a depth-first search (DFS) strategy, and the system captures screenshots and saves the annotated interaction data as it goes. Once exploration is complete, a trajectory evaluation mechanism selects the high-quality interaction sequences. The whole process runs as a continuous loop that checks at every step whether the maximum exploration depth has been reached.
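
The exploration loop can be sketched as a depth-first search over a toy graph of UI states. This is a hypothetical illustration, not Mano's explorer: a global `visited` set stands in for the loop and redundant-branch constraints, the depth check runs at every step, screenshot capture is omitted, and trajectory evaluation is reduced to keeping paths that end in an assumed set of goal states. The screen names and `goal_states` are invented for the example.

```python
def explore(graph: dict[str, list[str]], start: str, max_depth: int) -> list[list[str]]:
    """Depth-first exploration of a UI state graph, recording every path it walks."""
    visited: set[str] = set()
    trajectories: list[list[str]] = []

    def dfs(state: str, path: list[str]) -> None:
        visited.add(state)
        trajectories.append(path)  # a real explorer would also save a screenshot here
        if len(path) - 1 >= max_depth:  # depth check at every step
            return
        for nxt in graph.get(state, []):
            if nxt in visited:  # blocks loops back to ancestors and redundant branches
                continue
            dfs(nxt, path + [nxt])

    dfs(start, [start])
    return trajectories


def keep_high_quality(trajs: list[list[str]], goal_states: set[str]) -> list[list[str]]:
    """Toy trajectory evaluation: keep non-trivial paths that end in a goal state."""
    return [t for t in trajs if len(t) > 1 and t[-1] in goal_states]


ui = {
    "home": ["search", "settings"],
    "search": ["results", "home"],
    "results": ["item", "search"],
    "item": ["checkout"],
    "settings": ["home"],
}
trajs = explore(ui, "home", max_depth=3)
good = keep_high_quality(trajs, goal_states={"item", "checkout"})
print(good)
```

With `max_depth=3`, the walk stops at `item` and never reaches `checkout`, which shows how the depth limit bounds the search.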

Mano's state-of-the-art (SOTA) performance builds on MiningLamp Technology's years of accumulated work on large models. In 2024, MiningLamp Technology's hypergraph multimodal large language model (HMLLM) and Video-SME dataset made significant breakthroughs in processing non-standard modality data (e.g., EEG and eye-tracking), earning a Best Paper nomination at ACM MM 2024. In 2025, MiningLamp Technology launched DeepMiner, a trustworthy intelligent agent for business data analysis. As DeepMiner's automated execution engine, Mano enables the agent to truly learn to "see" and "click," performing precise operations in complex software and browser environments. Looking ahead, MiningLamp Technology will further optimize Mano for application and edge-side deployment, accelerating the intelligent transformation of enterprises.

**The press release content is from PR Newswire. Bastille Post is not involved in its creation.**

Global SOTA on Dual Benchmarks! MiningLamp Technology's Specialized GUI Model Mano Unveils New Era of Intelligent GUI Operation