TOKYO--(BUSINESS WIRE)--Jan 21, 2026--

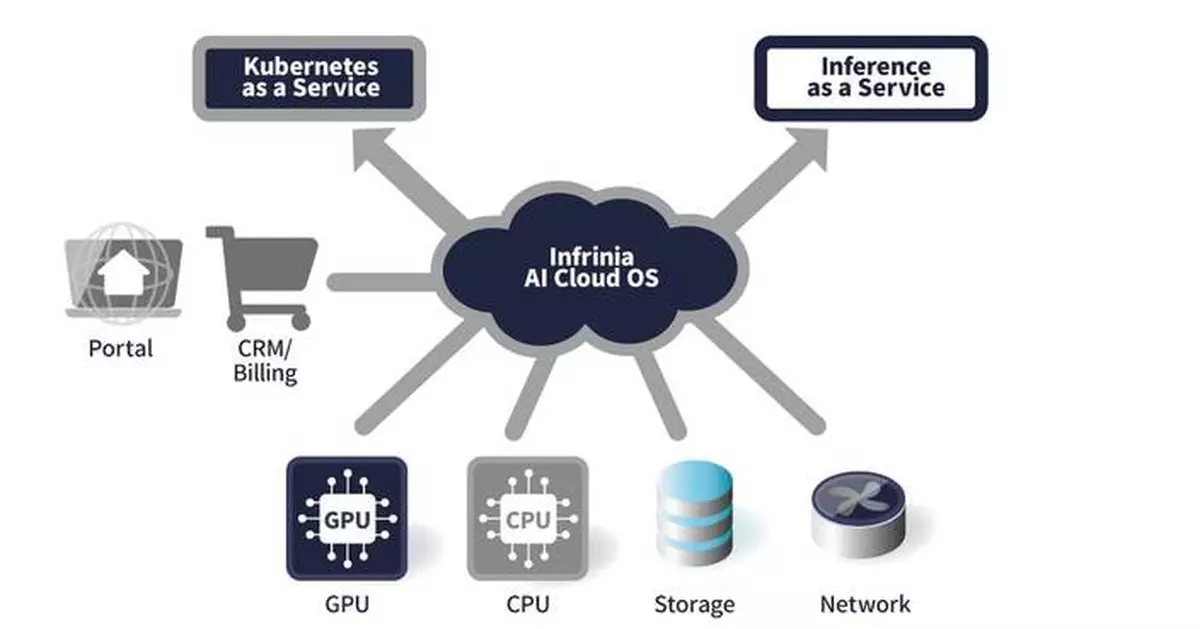

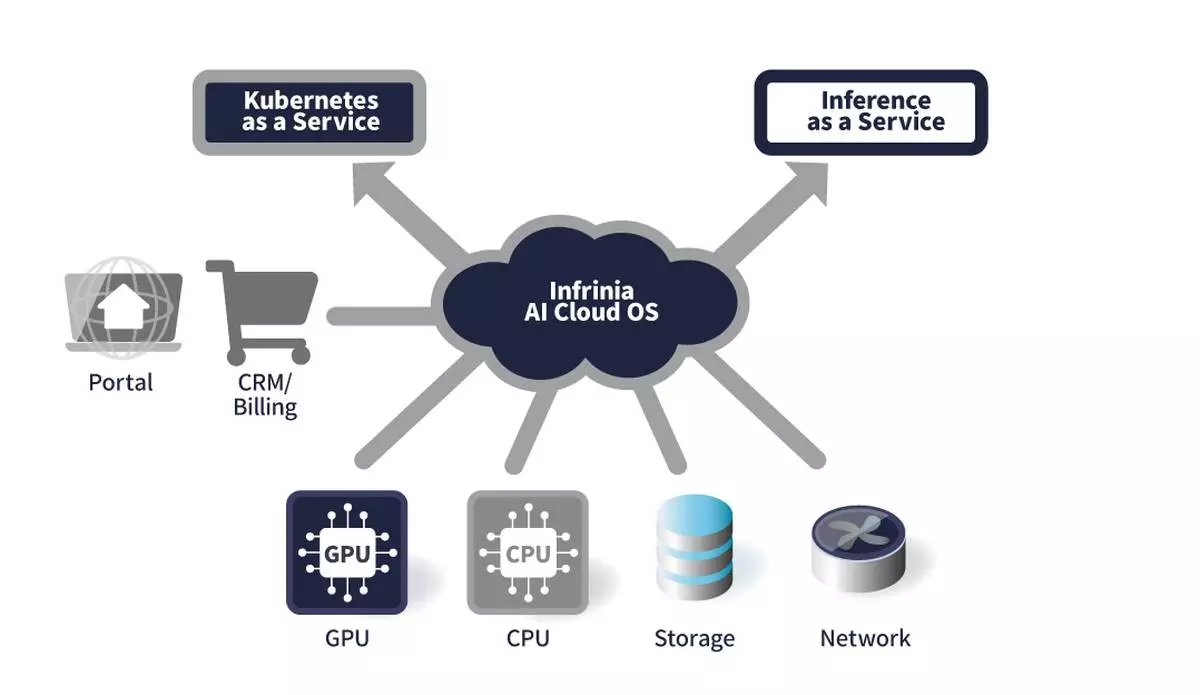

SoftBank Corp. (TOKYO:9434, President & CEO: Junichi Miyakawa, “SoftBank”) announced that its Infrinia Team*1, which works on the development of next-generation AI infrastructure architecture and systems, has developed “Infrinia AI Cloud OS,” a software stack*2 designed for AI data centers.

By deploying “Infrinia AI Cloud OS,” AI data center operators can build Kubernetes*3 as a Service (KaaS) in a multi-tenant environment, and Inference as a Service (Inf-aaS) that provides large language model (LLM) inference capabilities via APIs, as part of their own GPU cloud services. In addition, the software stack is expected to reduce total cost of ownership (TCO) as well as operational burden compared with bespoke solutions or in-house development. This will enable the rapid delivery of GPU cloud services that efficiently and flexibly support the full AI lifecycle, from AI model training to inference.

SoftBank plans to deploy “Infrinia AI Cloud OS” initially within its own GPU cloud services. Furthermore, the Infrinia Team aims to expand deployment to overseas data centers and cloud environments with a view to global adoption.

Background of “Infrinia AI Cloud OS” Development

The demand for GPU-accelerated AI computing is expanding rapidly across the generative AI, autonomous robotics, simulation, drug discovery, and materials development fields. As a result, user needs and usage patterns for AI computing are becoming increasingly diverse and sophisticated, and requirements including the following have emerged:

Building and operating GPU cloud services that meet these requirements requires highly specialized expertise and involves complex operational tasks, placing a significant burden on GPU cloud service providers.

To address these challenges, the Infrinia Team developed “Infrinia AI Cloud OS,” a software stack that maximizes GPU performance while enabling the easy and rapid deployment and operation of advanced GPU cloud services.

Key Features of “Infrinia AI Cloud OS”

Kubernetes as a Service

Inference as a Service

Secure Multi-tenancy and High Operability

These key features allow AI data center operators with customer management systems, as well as enterprises offering GPU cloud services, to add advanced capabilities to their own GPU service offerings, enabling efficient AI model training and inference while making flexible use of GPU resources.

Junichi Miyakawa, President & CEO of SoftBank Corp., commented:

“To further deepen the utilization of AI as it evolves toward AI agents and Physical AI, SoftBank is launching a new GPU cloud service and software business to provide the essential capabilities required for the large-scale deployment of AI in society. At the core of this initiative is our in-house developed ‘Infrinia AI Cloud OS,’ a GPU cloud platform software designed for next-generation AI infrastructure that seamlessly connects AI data centers, enterprises, service providers and developers. The advancement of AI infrastructure requires not only physical components such as GPU servers and storage, but also software that integrates these resources and enables them to be delivered flexibly and at scale. Through Infrinia, SoftBank will play a central role in building the cloud foundation for the AI era and delivering sustainable value to society.”

For more information on “Infrinia AI Cloud OS,” please visit the website below:

https://infrinia.ai/

About SoftBank Corp.

Guided by the SoftBank Group’s corporate philosophy, “Information Revolution – Happiness for everyone,” SoftBank Corp. (TOKYO: 9434) operates telecommunications and IT businesses in Japan and globally. Building on its strong business foundation, SoftBank Corp. is expanding into non-telecom fields in line with its “Beyond Carrier” growth strategy while further growing its telecom business by harnessing the power of 5G/6G, IoT, Digital Twin and Non-Terrestrial Network (NTN) solutions, including High Altitude Platform Station (HAPS)-based stratospheric telecommunications. While constructing AI data centers and developing homegrown LLMs specialized for the Japanese language, SoftBank is integrating AI with radio access networks (AI-RAN), with the aim of becoming a provider of next-generation social infrastructure. To learn more, please visit https://www.softbank.jp/en/corp/

*1: The Infrinia Team is an Infrastructure Architecture & Systems Team established within SB Telecom America, Corp., a wholly owned subsidiary of SoftBank Corp., as part of the company’s broader initiative to advance next-generation AI infrastructure. The Infrinia Team is based in Sunnyvale, California, USA.

*2: A software stack is a set of software components and functions used together to build and operate systems and applications.

*3: Kubernetes is an open-source system for automating the deployment and scaling of applications and for managing containerized applications.

Image of “Infrinia AI Cloud OS”