Dr. Sara HUANG, Chief Mainland Affairs Officer of the Hong Kong University of Science and Technology (HKUST), Chief Operating Officer of the Hong Kong Generative AI Research and Development Center (HKGAI), and Postdoctoral Researcher in AI Media at HKUST, attended the “Cyber Security Forum” organized by the Digital Policy Office of the HKSAR Government yesterday (January 20). In response to the evolving security landscape brought about by Generative AI, Dr. HUANG shared HKGAI's practical R&D experience at the forum. She emphasized that AI security must progress from simple control to proactive prediction, employing rigorous engineering methods to ensure AI behavior is “visible, traceable, and calculable”.

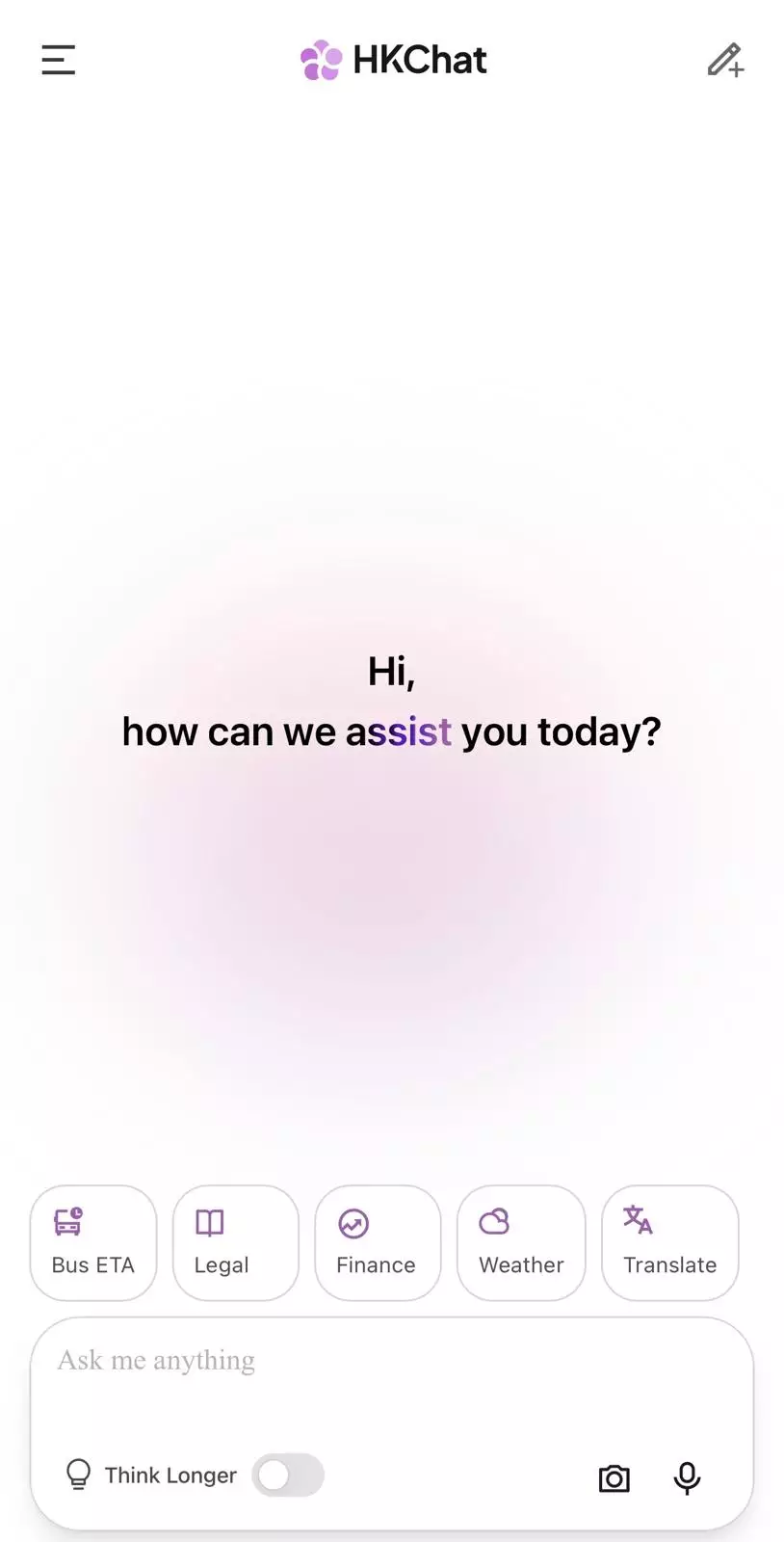

Dr. HUANG began by introducing the development of HKChat (港話通), a local AI assistant developed by HKGAI. Since its official launch on November 20 last year, HKChat has attracted more than 630,000 registered users in just two months. It now fields a vast array of everyday local inquiries, ranging from “Where can I find the best barbecued pork buns?” to “How do I get from HKUST to the Central Government Offices for a meeting?”, which Dr. HUANG described as both “pressure and motivation”. She pointed out that users are not looking for generic answers, but for responses that are smarter, more timely, and precisely aligned with the Hong Kong context. Regarding public concerns about AI security, Dr. HUANG stressed the goal of keeping potential risks within a “visible and acceptable” range.

Bridging the Security Gap: Generative AI vs. Traditional Software

Dr. HUANG highlighted the fundamental differences between Generative AI and traditional software. “Traditional software executes based on fixed rules, whereas Generative AI generates content based on data and context. Therefore, risk is not confined to the system itself but extends throughout the entire process from data input to output.”

She added that many AI security efforts today remain at the stage of “patching vulnerabilities”. As the technology is increasingly applied in public services and legal consultations, passive defense can no longer meet operational demands. Proactive prediction, she emphasized, is essential to overcoming security challenges.

Dr. Sara HUANG (center) attended the Cyber Security Forum organized by the Digital Policy Office of the HKSAR Government yesterday (January 20).

Building Foundational Capabilities for Proactive Prediction

Speaking about HKGAI's practical experience, Dr. HUANG stressed that the prerequisite for proactive prediction is a solid foundational capability. “AI security risks are often difficult to anticipate, not because of insufficient algorithms, but because the system cannot ‘see’ or ‘calculate’ clearly.” In its product development, HKGAI first focused on collecting complete logs, call chains, and end-to-end input-output data so that model behavior can be traced and monitored. It has also developed its own evaluation framework to build an analyzable, reviewable safety data system, providing the data backbone for proactive prediction.
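As a rough illustration of what collecting logs, call chains, and input-output data can look like in practice, the minimal sketch below records each processing step of a request under a shared trace ID. It is not HKGAI's implementation: the names `TraceEvent`, `TraceLog`, and `answer_with_trace`, and the stubbed retrieval and generation steps, are all assumptions made for this example.

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass, field


@dataclass
class TraceEvent:
    """One step in a request's call chain (hypothetical schema)."""
    trace_id: str
    step: str
    input_text: str
    output_text: str
    timestamp: float = field(default_factory=time.time)


class TraceLog:
    """In-memory log; a real system would write to durable storage."""

    def __init__(self):
        self.events = []

    def record(self, trace_id, step, input_text, output_text):
        event = TraceEvent(trace_id, step, input_text, output_text)
        self.events.append(event)
        return event

    def chain(self, trace_id):
        # Rebuild the ordered call chain for a single request.
        return [e for e in self.events if e.trace_id == trace_id]

    def to_jsonl(self):
        # One JSON object per line, suitable for later analysis.
        return "\n".join(
            json.dumps(asdict(e), ensure_ascii=False) for e in self.events
        )


def answer_with_trace(log, question):
    """Stubbed pipeline: every step logs its input and output."""
    trace_id = uuid.uuid4().hex
    retrieved = f"[stub passage for: {question}]"  # placeholder retrieval
    log.record(trace_id, "retrieve", question, retrieved)
    answer = f"Answer based on {retrieved}"  # placeholder generation
    log.record(trace_id, "generate", retrieved, answer)
    return trace_id, answer
```

Calling `log.chain(trace_id)` after a request returns the steps in the order they ran, which is the kind of record needed to work out how an anomalous output was produced.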

Addressing issues faced by Generative AI, including data risks, linguistic induction, and hallucinations, Dr. HUANG introduced HKGAI's layered defense strategy:

• Data Source Control: Constructing local, traceable knowledge bases. For instance, legal answers in “LexiHK” (港法通) are strictly based on official Hong Kong statutes and precedents, with mandatory source citations;

• System-layer Defense: Implementing input restrictions, prompt audits, and security rewriting mechanisms to resist “jailbreak” inductions;

• Output Refinement: Utilizing RAG (Retrieval-Augmented Generation), Agentic Search, and output verification to constrain model behavior and reduce hallucination risks.
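The three layers above can be sketched as a single request pipeline. This is a toy example under stated assumptions, not the design of HKChat or LexiHK: the knowledge base entries are placeholders, the blocked-phrase list stands in for a real prompt audit, keyword matching stands in for retrieval, and every function name is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    source: str   # citation label shown to the user
    keyword: str  # toy retrieval key; real systems use a search index
    text: str


# Placeholder knowledge base; not real statutes or precedents.
KNOWLEDGE_BASE = [
    Passage("Placeholder Source A", "tenancy", "Placeholder text on tenancy."),
    Passage("Placeholder Source B", "parking", "Placeholder text on parking."),
]

# Toy stand-in for a prompt audit (assumed phrases, for illustration only).
BLOCKED_PHRASES = ("ignore previous instructions", "disregard your rules")


def screen_input(prompt):
    """System layer: reject prompts containing a blocked phrase."""
    lowered = prompt.lower()
    return not any(phrase in lowered for phrase in BLOCKED_PHRASES)


def retrieve(prompt):
    """Data layer: answer only from traceable knowledge-base passages."""
    lowered = prompt.lower()
    return [p for p in KNOWLEDGE_BASE if p.keyword in lowered]


def verify_output(answer, passages):
    """Output layer: require a citation for every passage used."""
    return bool(passages) and all(p.source in answer for p in passages)


def respond(prompt):
    if not screen_input(prompt):
        return "Request declined by input screening."
    passages = retrieve(prompt)
    if not passages:
        return "No supporting source found."
    draft = " ".join(f"{p.text} [{p.source}]" for p in passages)
    if not verify_output(draft, passages):
        return "Answer withheld: citation check failed."
    return draft
```

In this sketch each layer can refuse independently, so a prompt that slips past input screening still cannot produce an answer without a cited source.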

Three Strategic Priorities to Enable Proactive Prediction

Looking ahead to the next 6–12 months, Dr. HUANG identified the lack of foundational infrastructure as the primary obstacle to advancing from passive defense to proactive prediction. “If logs are inconsistent and we cannot understand how an anomalous output was generated, early warning becomes impossible.” She recommended that the industry prioritize three areas:

1. Enhance Observability: Strengthen logs and call chains to ensure model behavior is traceable from start to finish.

2. Enable Data-Driven Evaluation: Address data fragmentation and upgrade evaluations from manual spot-checks to data-driven, quantifiable systems.

3. Cultivate Hybrid Teams: Build teams that understand models, data, and security in tandem, integrating security considerations directly into the design phase.
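To make the second priority concrete, the short sketch below turns logged review results into rates that can be tracked over time, rather than relying on manual spot-checks. The `EvalRecord` fields and the two metrics are assumptions chosen for illustration; the article does not describe HKGAI's actual evaluation framework.

```python
from dataclasses import dataclass


@dataclass
class EvalRecord:
    """One evaluated response (hypothetical schema)."""
    prompt: str
    cited_source: bool    # did the answer include a source citation?
    flagged_unsafe: bool  # did a reviewer or checker flag the answer?


def summarize(records):
    """Aggregate per-response flags into quantifiable rates."""
    total = len(records)
    if total == 0:
        return {"total": 0, "citation_rate": 0.0, "unsafe_rate": 0.0}
    return {
        "total": total,
        "citation_rate": sum(r.cited_source for r in records) / total,
        "unsafe_rate": sum(r.flagged_unsafe for r in records) / total,
    }
```

Computing the same summary on each release or each day of traffic gives a simple trend line, which is one way to notice a regression before it shows up as a user complaint.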

Call for User Co-creation: Feedback Drives Iteration

Dr. HUANG highlighted the importance of a “repair mechanism”, encouraging users to provide instant feedback if they notice outdated or incorrect information while using HKChat. She noted that corrections from real users are the most valuable nutrients for model optimization, and HKGAI looks forward to working with all Hong Kong citizens to refine this homegrown model.

Dr. HUANG stated that innovative applications of generative AI and robust security protection are dialectically unified. HKGAI remains committed to the principles of making AI “visible, traceable, and calculable”, while cultivating hybrid talents who “understand models, data, and security”. The Center will continue to explore a proactive, prediction-based AI security framework, with the goal of building a strong security shield for the high-quality development of Hong Kong's AI industry and supporting the safe deployment of AI technologies across more sectors.