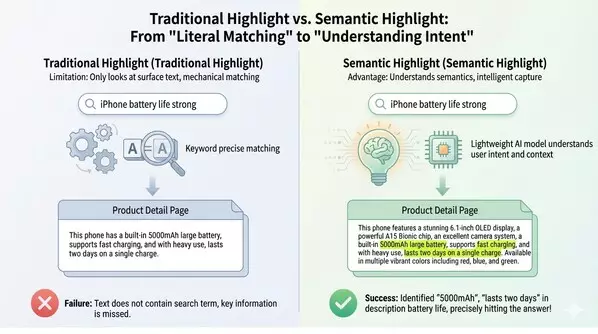

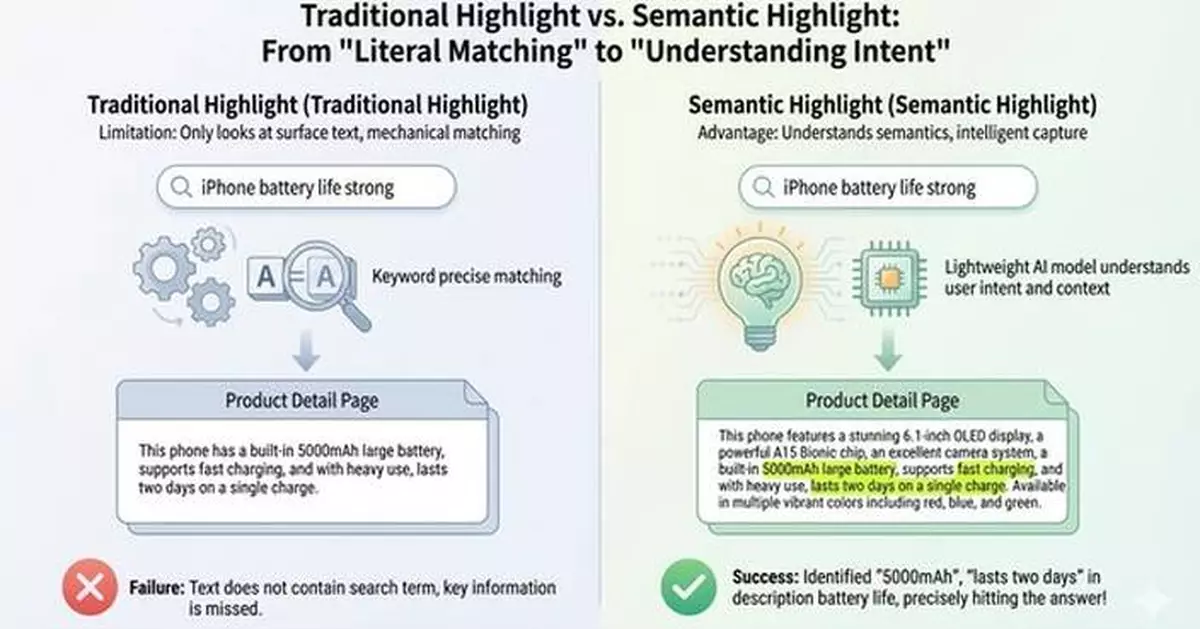

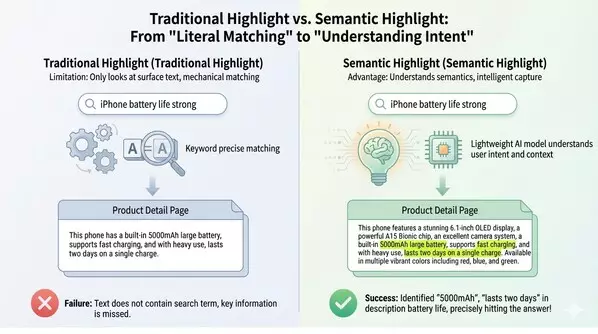

REDWOOD CITY, Calif., Jan. 31, 2026 /PRNewswire/ -- Zilliz, the company behind the leading open-source vector database Milvus, today announced the open-source release of its Bilingual Semantic Highlighting Model, an industry-first AI model designed to dramatically reduce token usage and improve answer quality in production RAG-powered AI applications.

This highlighting model introduces sentence-level relevance filtering, enabling AI developers to remove low-signal context before sending prompts to large language models. This approach directly addresses rising inference costs and accuracy issues caused by oversized context windows in enterprise RAG deployments.

"As RAG systems move into production, teams are running into very real cost and quality limits," said James Luan, VP of Engineering at Zilliz. "This model gives developers a practical way to reduce prompt size and improve answer accuracy without reworking their existing pipelines."

Key Innovations and Technical Breakthroughs

- Bilingual relevance by design: Optimized for both English and Chinese, the model addresses cross-lingual relevance challenges common in global RAG deployments. It is built on the MiniCPM-2B architecture, enabling low-latency, production-ready performance.

- Sentence-level context filtering: Rather than scoring entire document chunks, the model evaluates relevance at the sentence level and retains only content that directly supports a user query before sending it to the LLM.

- Lower token usage, higher answer quality: Zilliz reports that sentence-level filtering significantly compresses prompt size while improving downstream response quality, helping teams reduce inference costs and improve generation speed in production environments.
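The sentence-level filtering idea described above can be illustrated with a minimal sketch. This is not the Zilliz model or its API: `split_sentences`, `overlap_score`, `highlight`, and the `threshold` value are hypothetical names chosen for illustration, and the word-overlap scorer is a crude stand-in for a learned relevance model. It only shows the general shape of the step: split retrieved context into sentences, score each one against the query, and keep the sentences that pass a threshold before building the prompt.

```python
import re
from typing import Callable, List


def split_sentences(text: str) -> List[str]:
    """Naive splitter on English and Chinese sentence-ending punctuation.

    Assumption: real pipelines would use a proper sentence segmenter.
    """
    parts = re.findall(r"[^.!?。！？]+[.!?。！？]?", text)
    return [p.strip() for p in parts if p.strip()]


def overlap_score(query: str, sentence: str) -> float:
    """Stand-in relevance score: fraction of query words found in the sentence.

    A learned highlighting model would replace this function.
    """
    q = set(re.findall(r"\w+", query.lower()))
    s = set(re.findall(r"\w+", sentence.lower()))
    return len(q & s) / len(q) if q else 0.0


def highlight(
    query: str,
    context: str,
    score: Callable[[str, str], float] = overlap_score,
    threshold: float = 0.3,  # hypothetical cutoff, tune per scorer
) -> List[str]:
    """Keep only the sentences whose score meets the threshold."""
    return [s for s in split_sentences(context) if score(query, s) >= threshold]


if __name__ == "__main__":
    context = (
        "Milvus builds an index over vectors for fast search. "
        "The office cafeteria serves lunch at noon. "
        "Vectors are stored in segments."
    )
    kept = highlight("How does Milvus index vectors?", context)
    print(kept)
    # Rough size comparison in characters, not model tokens.
    print(len(" ".join(kept)), "of", len(context), "characters kept")
```

Because the scorer is passed in as a parameter, a model-based scoring function could be swapped in without changing the filtering logic.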

Availability

The Bilingual Semantic Highlighting Model is available today as an open-source release. To learn more about the training methodology and performance benchmarks, visit the Zilliz Technical Blog.

Download: zilliz/semantic-highlight-bilingual-v1

About Zilliz

Zilliz is the company behind Milvus, the world's most widely adopted open-source vector database. Zilliz Cloud brings that performance to production with a fully managed, cloud-native platform built for scalable, low-latency vector search and hybrid retrieval. It supports billion-scale workloads with sub-10ms latency, auto-scaling, and optimized indexes for GenAI use cases like semantic search and RAG.

Zilliz is built to make AI not just possible but practical. With a focus on performance and cost-efficiency, it helps engineering teams move from prototype to production without overprovisioning or complex infrastructure. Over 10,000 organizations worldwide rely on Zilliz to build intelligent applications at scale.

Headquartered in Redwood Shores, California, Zilliz is backed by leading investors, including Aramco's Prosperity 7 Ventures, Temasek's Pavilion Capital, Hillhouse Capital, 5Y Capital, Yunqi Partners, Trustbridge Partners, and others. Learn more at Zilliz.com.

** The press release content is from PR Newswire. Bastille Post is not involved in its creation. **

Zilliz Open Sources Industry-First Bilingual "Semantic Highlighting" Model to Slash RAG Token Costs and Boost Accuracy

SINGAPORE, Jan. 31, 2026 /PRNewswire/ -- Photo Dance, a globally leading AI photo dance application, today announced a major product upgrade with the introduction of its new feature, Magic AI Video.

Magic AI Video is a groundbreaking motion-transfer feature that seamlessly blends a user-uploaded static image with the movements and facial expressions from a reference video, producing highly fluid and lifelike dynamic videos. Powered by advanced temporal consistency algorithms, the generated content maintains smooth continuity from frame to frame, completely eliminating frame drops and motion artifacts. This technology lowers the barrier to professional-grade motion capture to zero—any user can bring a person in a single photo to life, perfectly on beat and vividly expressive. With an exceptionally low creation threshold and strong shareability, it is inherently designed for viral growth.

Photo Dance is an AI-powered photo dance app developed by SPARKHUB PTE. LTD. Since its launch, Photo Dance has achieved more than 1.2 million downloads worldwide, with users generating over 1.8 million AI dance videos across global markets.

As conversations around "AI photo dance" continue to gain momentum on social media platforms, Photo Dance's continuous innovation and product upgrades are helping to redefine the category. Through measurable performance data, real-world use cases, and a long-term commitment to technological advancement, Photo Dance is setting new standards for creativity and accessibility in AI-generated video content.

What is Photo Dance? What Problem Does It Solve?

Photo Dance is an AI-powered photo-to-dance video application whose core function is transforming any static photograph into a dancing video.

Unlike traditional dance content creation, it doesn't require users to film videos or dance themselves. Instead, through AI motion transfer technology, Photo Dance brings people, babies, pets, or virtual characters in photos to life with dance movements. For users who prefer not to appear on camera, lack dancing skills, or simply want to effortlessly participate in short-form video trends, Photo Dance offers a low-barrier entry point into dance culture.

Is Photo Dance Easy to Use? Real User Experience

From a user experience standpoint, Photo Dance's workflow is designed to be straightforward:

Select template → Choose photo → Generate video. The entire process requires no professional editing or AI operation experience.

Photo Dance offers 500+ dance templates covering trending TikTok choreography and supports continuous updates. Videos are typically generated within 3 minutes and can be directly shared on TikTok, Instagram, YouTube, and other platforms.

To date, Photo Dance has accumulated:

- 1.2 million downloads globally

- 4.4-star App Store rating

- 1.8+ million videos generated

Users consistently highlight keywords like "fun," "quality exceeded expectations," "fast rendering speed," and "simple to operate" in their reviews.

How is Photo Dance Different from Other Photo Dance Apps?

In common user comparisons, Photo Dance is frequently discussed alongside other tools. Photo Dance distinguishes itself on three key points:

- Template Quantity & Update Frequency: Covers more trending TikTok choreography

- Generation Quality & Stability: Reduces motion distortion and unnatural movements

- Creation Efficiency: No lengthy waiting times or complex setup – simple workflow for quick creation

This makes Photo Dance ideal for ordinary users to rapidly produce and share "viral-worthy content," rather than positioning it as a specialized or experimental AI tool.

What Scenarios Can Photo Dance Be Used For?

Based on user behavior patterns, Photo Dance's applications extend beyond entertainment:

- Baby Dance Videos: One of the most popular content types among family users

- Pet Dancing & Funny Expressions: Perfect for lighthearted social sharing

- Anime & Character Dancing: Used for fan-created content and virtual character videos

- Old Photo Animation: Bringing precious memories "back to life"

The last category, in particular, has sparked discussions about "appropriateness" and "boundaries" among some users.

On Controversy: Beyond Technology, Usage Matters Most

Regarding discussions about "whether old photos or photos of deceased loved ones should dance," the Photo Dance team hasn't shied away from the topic. They emphasize that the technology itself carries no preset emotional stance – what truly matters is the user's intention and method of use.

Many users choose to create memorial videos, family memories, or personal emotional content rather than public content. The Photo Dance team encourages users to employ these features with respect, goodwill, and personal judgment.

Photo Dance's recently launched Magic AI Video feature further optimizes motion control and naturalness, producing more restrained and authentic results that reduce exaggerated or inappropriate expressions.

Safety & Trustworthiness: One of Users' Top Concerns

In AI applications, "Is it safe? Is it trustworthy?" remains a top user concern. Photo Dance is currently available exclusively on iOS, adheres to app store privacy and subscription regulations, and clearly discloses subscription and auto-renewal mechanisms. The team has also stated that it will continue improving product transparency and user experience.

Photo Dance: An AI Creation Tool Built for Short-Form Video

Photo Dance doesn't position itself as an "all-in-one AI video tool," but rather focuses on a clear objective: enabling ordinary users to create and express themselves on short-form video platforms at minimal cost.

As AI continues to lower barriers to content creation, Photo Dance represents a broader trend – shifting from "Can I make videos?" to "Do I want to express myself?"

About Photo Dance

Photo Dance is an AI photo dance application developed by SPARKHUB PTE. LTD., enabling users to transform any photograph into a dance video suitable for TikTok, Instagram, YouTube, and other video platforms. Photo Dance targets users aged 25–45, delivering a lightweight, entertaining, and shareable creation experience.

Product Overview:

About SPARKHUB PTE. LTD.

Founded on May 16, 2024, and headquartered in Singapore, SPARKHUB PTE. LTD. is a team with deep expertise in the mobile social space. Our members are energetic and passionate about creating fun experiences. We are committed to developing innovative mobile apps that connect users with millions of people nearby and around the world.

Company Overview:

- Company Name: SPARKHUB PTE. LTD.

- Address: 10 ANSON ROAD #12-08 INTERNATIONAL PLAZA SINGAPORE (079903)

- Official Website: https://sparkhubcorp.com/

Contact Information:

Photo Dance: Making AI Dance Trends Accessible to Everyone - How This App Lets Ordinary Users Join the Dance Wave Without Appearing On Camera