Turing Award winner Leslie Valiant has cautioned that large language models (LLMs), however intelligent they may appear, are a far cry from possessing human-like thinking capabilities.

Valiant, a celebrated British computer scientist, received the Association for Computing Machinery's 2010 A.M. Turing Award for work that helped lay the foundations of machine learning.

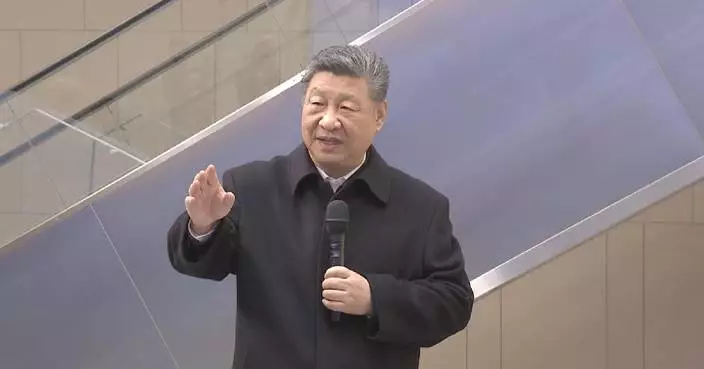

In an interview with China Media Group (CMG), Valiant acknowledged that breakthroughs in AI have produced fascinating results, but urged observers to assess objectively what the technology can actually do.

"I think the big difference of these large language models is the fact that they can produce very smooth language in English or Chinese. Humans find it stunning. Intuitively, without thinking, you think anything which can produce such smooth sentences must be human. But, of course, that's not true," said the computer scientist.

Despite the impressive achievements of today's LLMs, Valiant pointed to key limitations compared with human thinking and knowledge.

"Whether these things pass the Turing test isn't so clear. The Turing test would be, like you interviewing a machine and really trying to find out whether it's a machine or not. And you're allowed to ask very careful questions. So, I suspect that you could figure out whether it's a machine or a human," he said.

"Generally, I think my view of these large language models is that, for things which I have little expertise, like doing some home repairs, so I go to a large language model because it knows much more than I do. But in anything where you have some expertise, it's not as accurate. I mean, in passing tests, it's very hard to judge because many questions in many tests are all standardized, repeated on the web a million times. So, it's just doing a search. So, for any story like that, I believe, one doesn't have to take that seriously," Valiant added.

Intelligence of LLMs should not be overstated: Turing Award laureate